Vision and Language Lab

The Vision and Language Lab (VLL) focuses on the intersection of artificial intelligence, software engineering, and natural language processing.

Our mission is to engineer intelligent systems that can understand, automate, and interact with complex information in a human-like manner.

Research Themes

Conducting research in multimodal natural language processing.

Advancing NLP for Arabic and its dialects.

Integrating vision and language for multimodal understanding.

Applying NLP techniques to robotics.

Latest News

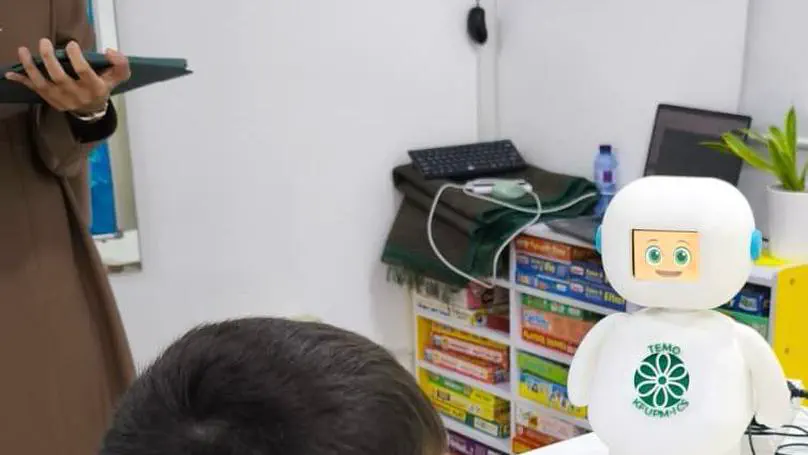

Over the past few months, we have been developing TEMO (Therapeutic Emotional LLM-powered Robot), a robot designed to support children with Autism Spectrum Disorder (ASD).

TEMO aims to make communication easier, more engaging, and more emotionally supportive for children who often find traditional interaction challenging. It acts as a child-friendly assistant powered by a large language model fine-tuned on an Applied Behavior Analysis (ABA) dataset. With animated facial expressions, age-appropriate dialogue, proactive speech, and a customized profile for each child, TEMO helps children practice communication skills, express their emotions more comfortably, and feel safe, motivated, and understood.